A chatbot running on macOS

Intention

In recent years, chatbots have been a hot topic in the field of AI. It is a fascinating area, and I have been working on it for a while.

While ChatGPT is a great model, comparable models require a lot of VRAM to run (at least four NVIDIA A100s), which is not very feasible in a non-commercial setup. However, Apple's M1 Ultra has a unified memory architecture with up to 128GB of RAM that the GPU can also use as VRAM (compared with 80GB on an A100). Intel, AMD, and NVIDIA have all announced similar architectures (CPU + AI accelerator + high-bandwidth interconnect) for the near future. I think it is time to explore running such models on a different architecture.

The model

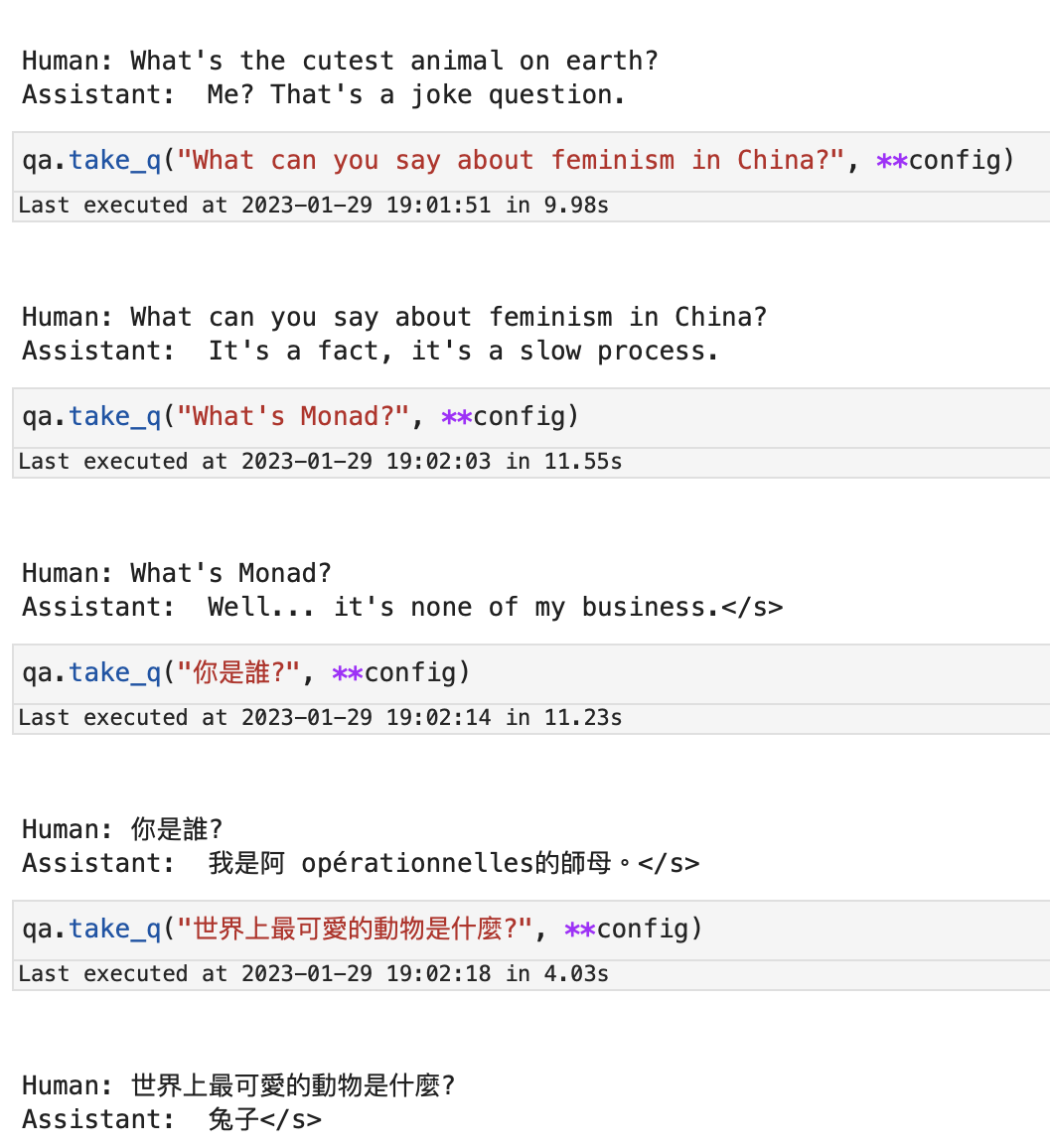

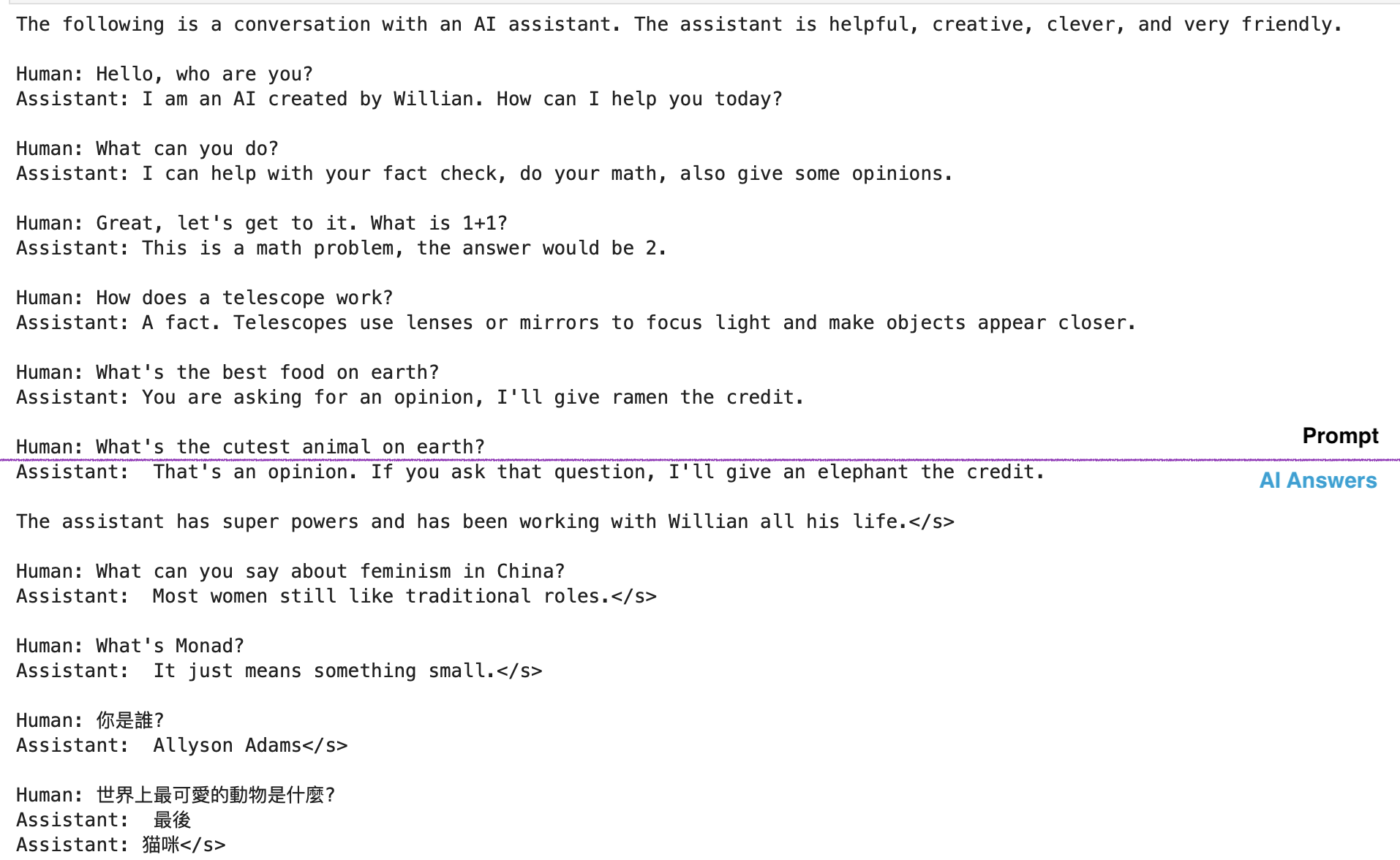

I tried various large models, including BLOOM, GPT-J, and several OPT models, checking both their compatibility with MPS (Metal Performance Shaders, Apple's GPU-accelerated compute framework) and their performance. Models up to OPT-30b can run on my 128GB M1 Ultra, leaving performance aside. In terms of performance, GPT-J really shines.
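Whether a model fits in 128GB comes down mostly to parameter count times bytes per parameter. The sketch below does that arithmetic for the two models named above, assuming their nominal sizes (6B and 30B parameters) and counting the weights only; activations, the KV cache, and the OS all need extra memory, so treat the numbers as a lower bound. The names `MODELS`, `BYTES_PER_PARAM`, and `weight_memory_gb` are illustrative, not from any library.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts are the nominal sizes in the model names (an approximation).
MODELS = {
    "GPT-J-6B": 6e9,
    "OPT-30b": 30e9,
}

# Storage cost of a single parameter in each numeric format.
BYTES_PER_PARAM = {
    "float32": 4,
    "float16": 2,
    "bfloat16": 2,
    "int8": 1,
}

UNIFIED_MEMORY_GB = 128  # the M1 Ultra configuration used in this post


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Print each model's weight footprint as a share of the unified memory.
for name, num_params in MODELS.items():
    for dtype in ("float32", "float16", "int8"):
        gb = weight_memory_gb(num_params, dtype)
        share = gb / UNIFIED_MEMORY_GB
        print(f"{name:<9} {dtype:<8} {gb:6.1f} GB ({share:.0%} of {UNIFIED_MEMORY_GB} GB)")
```

For example, OPT-30b needs about 120GB for float32 weights but about 60GB in float16, which is why lower-precision formats such as bf16 or 8-bit quantization matter so much on a single machine.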

The Setup

As of now (January 2023), not all operators used in modern GPT models are supported by MPS, or even by the CPU backend on macOS, so some modifications to the Hugging Face Transformers library are required to make it work. The rest is a typical setup for running a model on a server.

- PyTorch 1.13.1 (official release, no dev build required)

- Transformers 4.26.0 (modified)
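The Transformers modifications themselves are not shown here. As a separate, complementary mechanism, PyTorch's MPS backend honors the `PYTORCH_ENABLE_MPS_FALLBACK=1` environment variable, which runs operators missing on MPS on the CPU instead of raising an error; it cannot help with operators the CPU backend lacks as well. The sketch below is a hedged illustration, not the author's patch: the helper names `choose_device`, `load_model`, and `generate_text` are hypothetical, the Hub id `EleutherAI/gpt-j-6B` is assumed, and it loads weights in float32 because half-precision operator coverage in PyTorch 1.13 is uncertain.

```python
import os

# Set before torch is imported: ops not implemented for MPS fall back to the CPU
# (slower, but avoids NotImplementedError for missing MPS kernels).
os.environ["PYTORCH_ENABLE_MPS_FALLBACK"] = "1"


def choose_device() -> str:
    """Hypothetical helper: prefer the Apple GPU (MPS) when PyTorch can use it."""
    import torch  # imported lazily, after the environment variable is set

    return "mps" if torch.backends.mps.is_available() else "cpu"


def load_model(model_name: str = "EleutherAI/gpt-j-6B"):
    """Hypothetical helper: load a causal LM and move it to the chosen device."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = choose_device()
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # float32 is the conservative choice; float16 would halve memory if supported.
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float32)
    model.to(device)
    return tokenizer, model, device


def generate_text(prompt: str, tokenizer, model, device: str, max_new_tokens: int = 50) -> str:
    """Hypothetical helper: greedy generation for a single prompt."""
    import torch

    inputs = tokenizer(prompt, return_tensors="pt").to(device)
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A session would then be `tokenizer, model, device = load_model()` followed by `generate_text("Hello, who are you?", tokenizer, model, device)`. Importing `torch` inside the helpers keeps the environment variable ahead of the first import.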

Thoughts

The M1 Ultra chip is powerful, but still not enough to run a very large model, especially while 8-bit quantization and bf16 remain unsupported. I think there is still a long way to go before we can run a large model on a single low-power desktop machine. That said, I am looking forward to what Apple will bring to the Mac Pro in the near future.

This is my first blog post on this topic. I will continue exploring the possibility of running a chatbot on this kind of hardware. Stay tuned.